Highly Performant HTTP Stack for Clojure

Key Takeaways

- Donkey is a new Clojure open-source HTTP library, designed for ease of use and performance.

- Identifying the strengths and weaknesses of a language helps with design decisions.

- Clojure frees developers from the perils of writing concurrent programs, but at a price.

- When concurrency is not a factor, consider using Java.

- Experimenting, iterating, and frequently measuring is key to achieving optimal results.

Donkey is the product of our quest for a highly performant HTTP stack that would (1) scale at the rapid pace of growth we have been experiencing and (2) save us computing costs.

Though our implementation is still in its early stages, with limited production mileage, we’ve seen such impressive numbers in our benchmark testing that we couldn’t keep it to ourselves.

Donkey highlights:

- Built on Vert.x – a mature, battle-tested, highly performant toolkit for building reactive applications on the JVM.

- Simple API.

- Works as a drop-in replacement for any Ring-compliant server.

- Outperforms the library we’ve been using at AppsFlyer.

- Open-source.

In this article, we’ll briefly outline AppsFlyer’s use-case and our growing need for a library like Donkey. Then we’ll dig deeper into the benchmarks we tested to get a sense of Donkey’s performance.

Finally, we will discuss Clojure's immutability, Netty's thread locality, and some of the design decisions we made.

The Web Handler

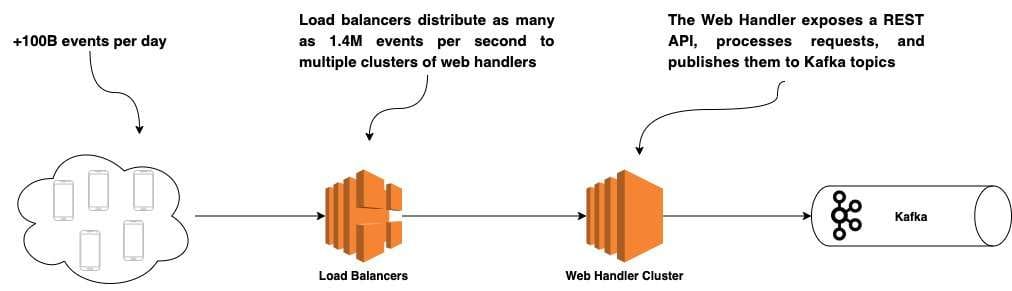

AppsFlyer is the market leader in real-time mobile attribution SaaS solutions. This means we handle a lot of traffic – more than 100 billion events per day, to be precise. Managing this in real-time means we have to process every one of those events – fast. I joined AppsFlyer to form a new team that would take ownership of the attribution flow’s front line. The team is responsible for handling this massive traffic, both efficiently and in a cost-effective manner.

The “Web Handler” is a Clojure service that handles all events coming from the AppsFlyer SDK, installed on more than 90% of mobile devices worldwide.

It uses a well-known Ring-compliant HTTP server and client library. Ring is the de facto standard for HTTP servers in the Clojure universe. We have experienced exponential growth in traffic over the last few years, and the solution that once served our needs was just not cutting it anymore. We decided it was time to explore other solutions that would scale better as we continued to grow while also cutting our AWS EC2 costs.

Always Measure

Throughout the process, we used the TechEmpower benchmarks to compare different implementations. TechEmpower publishes performance comparisons of an impressive number of web frameworks in practically every language imaginable.

Benchmark setup:

- Hardware: 2.3 GHz 8-Core Intel Core i9 MacBook Pro with 16 GB 2400 MHz DDR4 SDRAM

- Software:

  - Plaintext and JSON serialization benchmarks (from TechEmpower)

  - reitit for routing

  - jsonista for JSON serialization

Indicators:

- Our key indicators were throughput and latency

- The main focus was on the relative performance of each library rather than on absolute values, because absolute results varied widely between runs. We therefore took many measurements and averaged them to minimize the effect of anomalies as much as possible.

Our current library’s benchmark results were used as the baseline for our comparisons. Although we’ve seen it reach up to ~100K and ~50K requests per second (rps) in the plaintext and JSON benchmarks, the vast majority of the time it demonstrated performance in this neighborhood:

| Benchmark | Requests/sec | Transfer/sec | Latency (99th percentile) | Total requests |
|---|---|---|---|---|
| Plaintext | 61,359 | 7.78MB | 627.07ms | 924,929 |
| JSON serialization | 43,327 | 6.94MB | 24.12ms | 654,233 |

TechEmpower benchmark – 15-second run

First Iteration

We planned to use Netty as the server implementation and wrap it in a Clojure API. The concern was how a Clojure interface would impact server performance, given the thread-safety model used by Clojure: immutability.

Immutability

Clojure makes writing concurrent applications easy. It frees the developer from the implications of sharing state between threads. It does so by using immutable data structures, as described by Rich Hickey in his talk The Value of Values:

If you have a value, if you have an immutable thing, can you give that to somebody else and not worry? Yes, you don’t have to worry. Do they have to worry about you now because they both now refer to the same value? Anybody have to worry? No. Values can be shared.

Because all objects are immutable, they can be accessed concurrently from multiple threads without locks, and therefore without lock contention, race conditions, hand-written synchronization, and all the other "fun" stuff that makes concurrent programs so difficult to get right.

The downside is that every update produces a new object with the updated state. A naive implementation would waste a great deal of CPU time copying and creating new objects and, as a result, cause longer and more frequent GC cycles.

Fortunately, Clojure implements its persistent hash maps and sets as Hash Array Mapped Tries (HAMTs), and its vectors as similar 32-way tries. By sharing the parts of a structure that do not change and copying only the path that does, it maintains immutability and thread safety at a low cost.
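To make structural sharing concrete, here is a minimal Java sketch. It is not Clojure's HAMT, which branches 32 ways on bits of each key's hash; it is a plain persistent binary search tree that relies on the same underlying idea, path copying. The class and method names are hypothetical.

```java
// A persistent (immutable) binary search tree. insert() never modifies an
// existing node: it copies only the nodes on the path from the root to the
// insertion point, and every subtree off that path is shared by both versions.
final class PersistentTree {
    final int key;
    final String value;
    final PersistentTree left;
    final PersistentTree right;

    PersistentTree(int key, String value, PersistentTree left, PersistentTree right) {
        this.key = key;
        this.value = value;
        this.left = left;
        this.right = right;
    }

    // Returns the root of a new version that maps key -> value.
    static PersistentTree insert(PersistentTree node, int key, String value) {
        if (node == null) {
            return new PersistentTree(key, value, null, null);
        }
        if (key < node.key) {
            // Copy this node and rebuild the left path; share the right subtree as-is.
            return new PersistentTree(node.key, node.value, insert(node.left, key, value), node.right);
        }
        if (key > node.key) {
            // Copy this node and rebuild the right path; share the left subtree as-is.
            return new PersistentTree(node.key, node.value, node.left, insert(node.right, key, value));
        }
        // Same key: copy this node with the new value and share both children.
        return new PersistentTree(key, value, node.left, node.right);
    }

    static String lookup(PersistentTree node, int key) {
        while (node != null) {
            if (key < node.key) {
                node = node.left;
            } else if (key > node.key) {
                node = node.right;
            } else {
                return node.value;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        PersistentTree v1 = null;
        for (int k : new int[] {50, 25, 75, 10, 30, 60, 90}) {
            v1 = insert(v1, k, "v" + k);
        }
        PersistentTree v2 = insert(v1, 65, "v65"); // lands in the right subtree

        System.out.println(lookup(v1, 65));         // null: the old version is unchanged
        System.out.println(lookup(v2, 65));         // v65
        System.out.println(v1.left == v2.left);     // true: left subtree is shared
        System.out.println(v1.right == v2.right);   // false: right path was copied
    }
}
```

In a balanced tree, an insert copies only the O(log n) nodes on a single path; a HAMT's 32-way branching keeps that path even shorter, which is what keeps immutable updates cheap.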

Another factor that can hinder performance is the widespread use of volatile fields and atomic references, which are still required to preserve the Java Memory Model's "happens-before" guarantees. The language's dynamic runtime and features such as laziness are just some of the elements that require synchronization on the JVM.
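Even something as simple as a lazily computed value pays for synchronization. The following Java sketch is a generic double-checked-locking holder, not Clojure's actual lazy-sequence or delay code, and `Lazy` and `LazyDemo` are hypothetical names. It needs a volatile field so that readers are guaranteed to see the fully built value, plus a lock so the value is computed only once.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// A lazily computed, thread-safe value (double-checked locking).
// The volatile field creates a happens-before edge between the thread that
// publishes the value and every thread that later reads it; the lock ensures
// the supplier runs at most once. Assumes the supplier never returns null.
final class Lazy<T> {
    private final Supplier<T> supplier;
    private volatile T value; // null until computed

    Lazy(Supplier<T> supplier) {
        this.supplier = supplier;
    }

    T get() {
        T result = value;              // fast path: a single volatile read
        if (result == null) {
            synchronized (this) {      // slow path: take the lock and re-check
                result = value;
                if (result == null) {
                    result = supplier.get();
                    value = result;    // volatile write publishes the finished value
                }
            }
        }
        return result;
    }
}

final class LazyDemo {
    // Has `threads` threads read the same Lazy at once; returns how many times
    // the supplier actually ran.
    static int run(int threads) {
        AtomicInteger computations = new AtomicInteger();
        Lazy<String> lazy = new Lazy<>(() -> {
            computations.incrementAndGet();
            return "computed";
        });
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            Callable<String> read = lazy::get;
            List<Future<String>> results = new ArrayList<>();
            for (int i = 0; i < threads; i++) {
                results.add(pool.submit(read));
            }
            for (Future<String> f : results) {
                if (!"computed".equals(f.get())) {
                    throw new IllegalStateException("unexpected value");
                }
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        return computations.get();
    }

    public static void main(String[] args) {
        System.out.println(run(8)); // 1
    }
}
```

Every read pays at least one volatile load, and the first concurrent readers contend on the lock; this is the kind of per-operation cost that adds up at hundreds of thousands of requests per second.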

Thread-locality

Netty takes a different approach to thread safety: an event-driven model with an event loop at its core. Users write handlers that are notified of incoming requests and outgoing responses. Each channel is bound to a single event loop, so both its incoming and outgoing handlers are always called on the same thread. As long as handlers don't create threads that mutate their state, that state is never shared and needs no synchronization.

Figure: Netty event loop
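Thread confinement is what lets event-loop handlers skip synchronization. The sketch below models an event loop with a single-thread `ExecutorService` from `java.util.concurrent`; it is not Netty's API, and `EventLoopDemo` and `CountingHandler` are hypothetical. Several threads submit requests, but only the loop thread ever touches the handler, so a plain `long` counter loses no updates.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// A toy "event loop": one thread runs every callback for the handler, so the
// handler's state is a plain field with no locks, volatile, or atomics.
final class EventLoopDemo {

    // Hypothetical handler whose state is confined to the event-loop thread.
    static final class CountingHandler {
        private long requests; // deliberately not volatile or atomic

        void onRequest() {
            requests++;
        }

        long count() {
            return requests;
        }
    }

    static long run(int producers, int requestsPerProducer) {
        ExecutorService loop = Executors.newSingleThreadExecutor();   // the event loop
        ExecutorService io = Executors.newFixedThreadPool(producers); // threads that enqueue "requests"
        CountingHandler handler = new CountingHandler();
        try {
            List<Future<?>> submitters = new ArrayList<>();
            for (int p = 0; p < producers; p++) {
                submitters.add(io.submit(() -> {
                    for (int i = 0; i < requestsPerProducer; i++) {
                        // Producers never touch the handler; they only enqueue work on the loop.
                        loop.execute(handler::onRequest);
                    }
                }));
            }
            for (Future<?> f : submitters) {
                f.get(); // all requests are queued once every producer finishes
            }
            // Read the result on the loop thread as well; Future.get() makes it visible here.
            Callable<Long> readCount = handler::count;
            return loop.submit(readCount).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            io.shutdown();
            loop.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(run(4, 10_000)); // 40000
    }
}
```

If the producers called `handler.onRequest()` directly, the unsynchronized `requests++` would race and typically lose updates; routing every call through the single loop thread is what makes the plain field safe.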

Minimal Benchmark

To assess the overhead associated with Clojure, we used the JSON serialization test case. For the sake of simplicity, we used Vert.x rather than Netty directly, and we wrote the minimal amount of code needed to return a response, as shown below. To emphasize the overhead of updating immutable objects, we used a slightly contrived way of building the response with assoc.

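```clojure
(require '[jsonista.core :as json])
(import '(io.vertx.ext.web RoutingContext))

;; Deliberately contrived: assoc on {} forces new map instances to be created.
(fn [^RoutingContext _ctx]
  (assoc {}
         :headers {"Content-Type" "application/json"}
         :status 200
         :body (json/write-value-as-string {"message" "Hello, World!"})))
```

Clojure request handler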

Both implementations use Jackson for serialization (jsonista is built on Jackson), so the real difference lies in the overhead Clojure adds on top of Java. In our benchmarks, the Clojure version underperformed by about 9-13% in peak throughput.

| Implementation | Requests/sec | Transfer/sec | Latency (99th percentile) | Total requests |
|---|---|---|---|---|
| Vert.x | 252,825 | 37.13MB | 18.86ms | 3,817,088 |
| Vert.x + Clojure | 226,643 | 32.42MB | 25.63ms | 3,420,219 |

TechEmpower – JSON serialization – 15-second run

We did not see the same degradation at lower request rates. Nevertheless, the comparison shows a significant drop in performance as request rates approach the server's capacity.

The next round of tests, also designed to gauge Clojure's overhead, compared different middleware implementations. The following table compares "off-the-shelf" middleware written in Clojure from ring-clojure with the same Ring-compliant middleware implemented internally in Java. Benchmarks were performed using Criterium.

| Middleware | Ring (Clojure) | Clojure + Java | Latency decrease |
|---|---|---|---|
| Parsing query parameters | 7.943209 µs | 1.024412 µs | 87.10% |
| Parsing JSON body | 31.706660 µs | 21.677851 µs | 31.63% |
| Serializing JSON body | 36.464090 µs | 12.789686 µs | 64.93% |
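The Java middleware itself is not shown here. As an illustration of why this kind of code can be cheaper in Java, here is a hypothetical single-pass query-string parser: it fills a mutable `HashMap` while scanning and freezes the result only once, instead of producing a new immutable map per parameter. Returning every value as a list is a simplification made for this sketch and is not how Ring represents single-valued parameters. It requires Java 10 or later for `URLDecoder.decode(String, Charset)` and `List.copyOf`.

```java
import java.net.URLDecoder;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical single-pass query-string parser. It mutates a local HashMap
// while scanning and freezes the result once at the end.
final class QueryParams {

    static Map<String, List<String>> parse(String query) {
        if (query == null || query.isEmpty()) {
            return Collections.emptyMap();
        }
        Map<String, List<String>> params = new HashMap<>();
        int start = 0;
        while (start <= query.length()) {
            int end = query.indexOf('&', start);
            if (end < 0) {
                end = query.length();
            }
            if (end > start) { // skip empty segments such as "a=1&&b=2"
                int eq = query.indexOf('=', start);
                String key;
                String value;
                if (eq < 0 || eq > end) { // a bare key such as "flag"
                    key = decode(query.substring(start, end));
                    value = "";
                } else {
                    key = decode(query.substring(start, eq));
                    value = decode(query.substring(eq + 1, end));
                }
                // Repeated keys accumulate their values in order.
                params.computeIfAbsent(key, k -> new ArrayList<>(1)).add(value);
            }
            start = end + 1;
        }
        // Freeze once: immutable value lists inside an unmodifiable map.
        params.replaceAll((k, v) -> List.copyOf(v));
        return Collections.unmodifiableMap(params);
    }

    private static String decode(String s) {
        // Decodes %XX escapes as UTF-8 and '+' as a space.
        return URLDecoder.decode(s, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(parse("a=1&b=hello%20world&a=3").get("a")); // [1, 3]
    }
}
```

The mutation is safe because the map never escapes the parsing thread until it has been frozen.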

Our tests showed a clear decrease in throughput and an increase in latency at full capacity. These observations led us to a two-layer design similar to Linux’s user and kernel space. The Clojure layer (user space) will be limited to the API exposed to the user, whereas the Java layer (kernel space) will handle the integration with Netty.
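The two-layer split described above can be sketched in plain Java. Every name here is hypothetical, and a Java lambda stands in for the Clojure user-space handler. The "kernel" owns mutable, thread-confined buffers, while the handler only receives and returns immutable maps whose keys loosely mirror Ring's `:request-method`, `:uri`, `:status`, and `:body`.

```java
import java.util.Map;
import java.util.function.Function;

// Sketch of a two-layer split. The "kernel" (Java) owns mutable state confined
// to one thread; the "user space" handler only sees immutable maps.
final class TwoLayerDemo {

    static final class Kernel {
        private final Function<Map<String, Object>, Map<String, Object>> userHandler;
        // Reused for every response; safe without locks only because a single
        // (event-loop) thread is assumed to call handle().
        private final StringBuilder scratch = new StringBuilder();

        Kernel(Function<Map<String, Object>, Map<String, Object>> userHandler) {
            this.userHandler = userHandler;
        }

        String handle(String method, String uri) {
            // Cross into user space with an immutable request map.
            Map<String, Object> request = Map.of("request-method", method, "uri", uri);
            Map<String, Object> response = userHandler.apply(request);

            // Back in kernel space: mutate the reusable buffer freely.
            scratch.setLength(0);
            scratch.append(response.get("status")).append(' ').append(response.get("body"));
            return scratch.toString();
        }
    }

    public static void main(String[] args) {
        // User space: a pure function from request map to response map.
        Kernel kernel = new Kernel(req -> Map.of("status", 200, "body", "Hello from " + req.get("uri")));
        System.out.println(kernel.handle("GET", "/hello")); // 200 Hello from /hello
    }
}
```

Keeping immutability at the boundary means user code retains Clojure's safety guarantees, while the per-request plumbing avoids paying for them.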

Results of the POC (aka “Green Yeti”)

The results from our proof of concept were reassuring. Throughput rose to roughly 3.5-4 times the baseline, and 99th-percentile latency dropped by about 45-50%:

| Benchmark | Requests/sec | Transfer/sec | Latency (99th percentile) | Total requests |
|---|---|---|---|---|
| Plaintext | 242,859 | 43.54MB | 311.41ms | 3,665,662 |
| JSON serialization | 149,663 | 29.69MB | 13.40ms | 2,259,769 |

TechEmpower benchmark – 15-second run
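The proof-of-concept figures can be checked against the baseline with a few lines of arithmetic. This Java snippet computes the throughput ratio and the drop in 99th-percentile latency from the requests/sec and latency columns of the baseline and POC tables; the class name is hypothetical.

```java
import java.util.Locale;

// Compares the proof-of-concept (POC) results with the baseline library.
final class PocComparison {

    // How many times the baseline throughput the POC achieved.
    static double ratio(double poc, double baseline) {
        return poc / baseline;
    }

    // Percentage by which the POC value is lower than the baseline value.
    static double percentDecrease(double poc, double baseline) {
        return 100.0 * (baseline - poc) / baseline;
    }

    public static void main(String[] args) {
        // Prints: Plaintext: 3.96x throughput, 50.3% lower p99 latency
        System.out.printf(Locale.ROOT, "Plaintext: %.2fx throughput, %.1f%% lower p99 latency%n",
                ratio(242_859, 61_359), percentDecrease(311.41, 627.07));
        // Prints: JSON: 3.45x throughput, 44.4% lower p99 latency
        System.out.printf(Locale.ROOT, "JSON: %.2fx throughput, %.1f%% lower p99 latency%n",
                ratio(149_663, 43_327), percentDecrease(13.40, 24.12));
    }
}
```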

To see how the design fared in a more realistic scenario, we wrote a small Clojure service and benchmarked it using wrk2. As expected, throughput was significantly lower than in the previous benchmarks, at 75-80K rps with latencies of 48-171 ms, but the results were still promising.

Next Iteration – Donkey

As the project's scope expanded, more and more features needed to be implemented. For the next iteration, we replaced Netty with Vert.x, which is itself built on Netty, giving us a higher level of abstraction with fairly low overhead.

We maintained the same core design as in the POC and extended it with:

- Minimalistic API: We kept the API very simple and close to the one we were already using, to ease migration.

- Extensible design: The design allows implementations other than Ring to be added in the future.

- Integration with routing libraries: The library exposes its own routing API but also supports integration with existing routing libraries, to ease migration.

- Vert.x encapsulation: Vert.x is fully encapsulated as an implementation detail. This lets us replace it in the future if we want to, and gives us control over the functionality we expose.

- Non-blocking behavior by default: The API promotes writing non-blocking application code, while still supporting legacy services that block, to ease migration.
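Non-blocking by default means handlers must never stall the event loop, so blocking legacy code has to run somewhere else. This plain `java.util.concurrent` sketch is similar in spirit to Vert.x's `executeBlocking`, but it is not Donkey's or Vert.x's API; `BlockingOffloadDemo` and `legacyBlockingLookup` are hypothetical. It runs a blocking call on a worker pool and then completes the response on the event-loop thread.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Sketch: the event loop must never block, so a blocking legacy call runs on a
// separate worker pool and the response is completed back on the event loop.
final class BlockingOffloadDemo {

    static String run() {
        ExecutorService eventLoop = Executors.newSingleThreadExecutor(r -> new Thread(r, "event-loop"));
        ExecutorService workers = Executors.newFixedThreadPool(4, r -> new Thread(r, "worker"));
        try {
            CompletableFuture<String> response = CompletableFuture
                    // The blocking call occupies a worker thread, not the event loop.
                    .supplyAsync(BlockingOffloadDemo::legacyBlockingLookup, workers)
                    // Build the response on the event-loop thread once the result is ready.
                    .thenApplyAsync(user -> "200 " + user + " (completed on "
                            + Thread.currentThread().getName() + ")", eventLoop);
            // Only this demo's caller waits here; the event loop itself never blocks.
            return response.join();
        } finally {
            workers.shutdown();
            eventLoop.shutdown();
        }
    }

    // Hypothetical legacy call that blocks its thread, e.g. a synchronous database client.
    static String legacyBlockingLookup() {
        try {
            Thread.sleep(50);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "user-42";
    }

    public static void main(String[] args) {
        System.out.println(run()); // 200 user-42 (completed on event-loop)
    }
}
```

While the worker sleeps, the event-loop thread stays free to process other callbacks, which is the property a non-blocking API is meant to protect.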

End Results

The results were better than we had hoped. We ran the TechEmpower plaintext and JSON serialization benchmarks on all the Clojure libraries featured in TechEmpower. Donkey had the highest throughput in the plaintext benchmark (811K rps) and the second-highest in JSON serialization (190K rps).

Charts: Plaintext and JSON Serialization benchmark results for the Clojure libraries

Our design is effective because it leverages the relative strengths of its components in different places. As engineers, we have many tools at our disposal. Whether it is a choice of language, framework, protocol, or design pattern, it is important to select the right tool for the job. We started by selecting a mature and proven framework with the right amount of abstraction to build on. We recognized that Netty and Vert.x use a different concurrency model than Clojure, and we did not shy away from using Java where concurrency wasn't a factor, saving the overhead associated with immutability and synchronization.

Conclusion

Benchmarking is not an exact science. Many elements beyond our control play a part and affect the results. The highest throughput we saw from Donkey was 996K and 207K rps in the plaintext and JSON serialization benchmarks, respectively. We don't expect to see these kinds of numbers in a real production setting. However, we demonstrated the importance of understanding the internals of the language, recognizing its relative strengths and weaknesses, and (last but not least) taking the time to experiment in order to achieve the best results.

Our road map includes plans to integrate Donkey into all AppsFlyer services that expose a REST API and to add support for HTTP/2, schema validation, OpenAPI 3 integration, JWT validation, health checks, and more.